What is the best practice for adding authentication and authorization to ServicePulse? We are hosting on K8s (OpenShift).

@wsmelton That’s definitely something we’d like to provide someday. I don’t know when it will happen. ServiceControl on containers is still very new and we need to do more analysis of different deployment strategies before we can “productize” deployment best practices in the form of a Helm chart.

We do now have a Kubernetes example in the PlatformContainerExamples repo. That’s where we recommend starting for now.

@stesme-delen This is highly dependent on your environment. We added the reverse proxy feature to the ServicePulse container so that you could take advantage of whatever your container orchestration environment provides for authenticating an ingress. If more than that is needed, you’ll ultimately need to create your own reverse proxy that authenticates however you need to in your environment, and then proxies to the ServicePulse container.

@stesme-delen I ended up using something called oauth2-proxy to perform the authentication in front of ServicePulse.

The docs mostly (if not entirely) mention using the particular/servicecontrol-ravendb Docker image.

Is using a hosted RavenDB instance (e.g. RavenDB Cloud) an option, or does this introduce too much latency, …?

PS: excellent work already!

Hi, we’re hosting on K8s. To make the setup HA, which services can I run with multiple replicas, and how many? And for the deployment strategy, can I use RollingUpdate everywhere?

Keep up the good work!

@janv8000 It’s not currently, because we are using the RavenDB 5.4 client at the moment and actively blocking the app from starting if you’re talking to a RavenDB 6.x server, which is (I think) all you can get in RavenDB Cloud.

But we’re currently working on updating to RavenDB 6 so stay tuned…

@stesme-delen With the currently released containers, we don’t support any sort of high-availability scenario at either the database or the application level. That may change at the database level in the future, but at the application level we have a large number of architectural challenges standing in the way of those types of features.

The safest deployment strategy would be Recreate to ensure that only one instance is talking to a database at a time.
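For Kubernetes users, here is a minimal sketch of how that could look in a Deployment manifest. The resource name, labels, and image tag are placeholders chosen for illustration, and a real deployment would also need the environment variables and volumes your setup requires. The relevant parts are `replicas: 1` and `strategy.type: Recreate`.

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: servicecontrol            # hypothetical name
spec:
  replicas: 1                     # no HA support, so run a single instance
  strategy:
    type: Recreate                # stop the old pod before starting the new one
  selector:
    matchLabels:
      app: servicecontrol         # hypothetical label
  template:
    metadata:
      labels:
        app: servicecontrol
    spec:
      containers:
        - name: servicecontrol
          image: particular/servicecontrol:latest   # placeholder tag; pin a version in practice
```

With `Recreate`, Kubernetes terminates the existing pod before creating its replacement, so there is a short downtime during each rollout, but two instances never talk to the same database at once.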

@DavidBoike, is there any way to install ServiceControl and ServicePulse on Linux without containers?

@Sean_Farmar No, we don’t ship the Linux binaries separately, only as containers.

What scenario are you thinking of where you would even want something like that?

@bording We are deploying on bare metal, not in the cloud (single-board computers like the Raspberry Pi).

We are limited in resources, so we wanted a lightweight way to deploy ServiceControl without adding Docker support.

Make sense?

Maybe I’m overthinking this, and Docker is the simplest solution.

I think it does make sense to investigate using Docker for this first. Overall it’s going to be a smoother experience to install and run ServiceControl as a container than, say, having to curl a tar.gz file, extract it, and then set it up as a systemd daemon.

Docker has been our focus for Linux support so far, so we’d really need some evidence of it being unworkable before we’d look at adding other options.

Thanks for this @bording. We will do a test and see how it goes.

We just released ServiceControl 6.0.0 that uses RavenDB 6.2.

@janv8000 and everyone else, this version also officially supports configuring your container to connect to an externally provided RavenDB server. However, to ensure compatibility between the client in ServiceControl and the server, we check that you are connecting to a RavenDB server version greater than or equal to 6.2. This means that RavenDB Cloud will work if you want to use it.

Hi Bob, we have an issue with the /var/lib/ravendb/data mount.

We use k8s.

We run the RavenDB container as user uid=999(ravendb) gid=999(ravendb) groups=999(ravendb).

When mounting the volume, we make sure to specify spec:securityContext:fsGroup: 999.

Doing this mounts the volume with group 999(ravendb), but the owning user remains root!

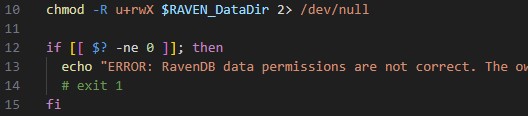

In sc-container-startup.sh there is a “chmod -R u+rwX …” line, which fails because the owner is root but the script runs as 999(ravendb).

Can you remove that chmod line please? Thx!

That line in the script is there to help you conform with the requirements stated by the Hibernating Rhinos image that our image is based on.

Is there a reason you are only changing the group and not the user as well? The container is run as 999, so why would you keep the user owner of the files as root? Answering this may help us understand your request better.

Hi Bob, in K8s, volumes are created in containers with root:root ownership by default.

There is an option, ‘securityContext:fsGroup’, to define the filesystem group ID that owns the volume. There is no equivalent option to specify the owning user, so it will always be set to root. Changing this would require root permissions.

I commented out the ‘exit 1’ line so the script only echoes the error and continues running.

RavenDB runs just fine and the data files are created with owner ravendb:ravendb.
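For anyone trying to reproduce this, the pod setup described above can be sketched roughly as follows. The pod name, image tag, and claim name are placeholders, not values from our actual manifests; the uid/gid of 999 and the mount path are the ones mentioned in this thread.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: servicecontrol-ravendb    # hypothetical name
spec:
  securityContext:
    runAsUser: 999                # ravendb user inside the container
    runAsGroup: 999               # ravendb group
    fsGroup: 999                  # volume gets group 999; the owning user is not changed by this
  containers:
    - name: ravendb
      image: particular/servicecontrol-ravendb:latest   # placeholder tag
      volumeMounts:
        - name: data
          mountPath: /var/lib/ravendb/data
  volumes:
    - name: data
      persistentVolumeClaim:
        claimName: ravendb-data   # hypothetical claim name
```

With this configuration the process can still read and write the volume through the group permissions, which is why RavenDB itself works even though a user-level chmod on the mount fails.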

FYI, we just released version 6.1.0 which removes the permission check, and only checks for the volume mount location. As it turns out all the different container hosting environments aren’t quite as aligned in how they handle file permissions as the Docker marketing would have you believe.

With the release of version 6.1.0, we’re considering the effort to deliver ServiceControl Linux containers complete.

Check out our final comment on the roadmap issue for a summary of improvements we’ve been able to make, thanks to help and feedback from all of you. Thank you for your part in making ServiceControl better!

In the future, it would be better to redirect feedback to more appropriate/permanent channels:

- Problems can be raised as a support request or as a GitHub bug report

- Feature requests can be raised as a GitHub issue