Hello,

I’ve been using NServiceBus endpoints with the RabbitMQ transport for about 3 years now and have never had a problem like this before. Just before the problem began, I added some new event handlers and sagas to my service, so I’m wondering whether a bad saga implementation could cause this kind of problem.

When another endpoint publishes an event that endpoint A is subscribed to, the message is added to endpoint A’s “ready” message queue as expected. In most cases, however, the message is then removed from endpoint A’s “ready” queue without ever being ACKed (while debugging, I found that the message handler is never hit). When endpoint A becomes inactive, the same message is restored to endpoint A’s “ready” queue, only to be removed again without being ACKed once endpoint A becomes active.

Here’s what this looks like in RabbitMQ:

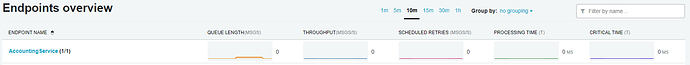

Here’s what this looks like in ServicePulse:

Long story short, I have messages that appear, disappear, reappear, and disappear again (ad infinitum) from my endpoint’s message queue without ever being handled or ACKed. The message in this example is unrelated to any of the event handlers or sagas I added to this service, and it never caused problems before.

I’m hoping someone can point me in the right direction for finding the cause. I’ve already updated ServiceControl, ServicePulse, and RabbitMQ to their latest versions.

Thank you,

John