Moved here from Gitter per @andreasohlund's request for wider discussion. Forgive the novel; I am just getting this thread caught up with the Gitter discussion.

NSB 7.0 w/RabbitMQ Transport using Conventional Topology

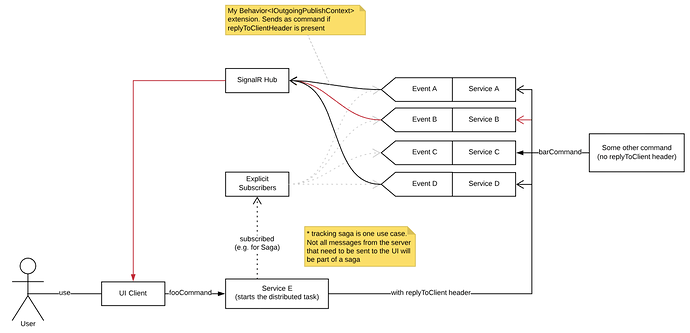

In regard to "Forwarding Events to UI Problem Statement.md" (GitHub):

Per suggestion, I’ve removed the inheritance and have instead plugged into the pipeline to create the behavior of “copying” the message to an endpoint specified in a custom header, if it is present. This approach is a little less obtrusive in that I don’t have to tag my events with a marker interface (e.g. IUpdateUi).

To summarize the problem domain:

Right now what happens is that any downstream service that completes a process can publish an event (e.g. UserCreated or CreateUserFailed) to the bus and any service that is interested in that particular event can subscribe to it.

If the task being sent into the system starts with a client (e.g. an application UI), then that client may want to receive feedback asynchronously from any services involved in that transaction. For example, the UI asks the system to create a user. If something in that chain fails, our Saga has to decide what to do, but if the task fails completely, the UI needs to know so that it can tell the user that the user was not created. Our UX could then do something like pop up a toast notification that takes them to a screen with the original information so they can correct it and try again, or whatever else our UX team decides is appropriate.

Where we’re at in the conversation:

My current implementation of the suggestion is to hook into the Publish pipeline. When the UI sends a command to our Gateway, we create the command and Send it on the bus, but we also add in some custom headers: UserId, TaskId, and ResponseEndpoint. That response endpoint is actually a SignalR message hub that is set up as an NSB endpoint.
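For anyone following along, the Gateway side might look roughly like the sketch below. The `CreateUser` command, the `GatewaySender` helper, and the exact header names are hypothetical; the only thing the forwarder shown later relies on is that the custom headers start with "Arsenal".

```csharp
using System;
using System.Threading.Tasks;
using NServiceBus;

// Hypothetical command; the real command types are not shown in this post.
public class CreateUser : ICommand
{
    public string UserName { get; set; }
}

public static class GatewaySender
{
    // Assumed header names. The post only confirms the "Arsenal" prefix.
    public const string UserIdHeader = "Arsenal.UserId";
    public const string TaskIdHeader = "Arsenal.TaskId";
    public const string ResponseEndpointHeader = "Arsenal.ResponseEndpoint";

    public static Task SendCreateUser(
        IMessageSession session,
        string userName,
        string userId,
        Guid taskId,
        string responseEndpoint)
    {
        var options = new SendOptions();

        // Attach the routing information the SignalR hub needs later on.
        options.SetHeader(UserIdHeader, userId);
        options.SetHeader(TaskIdHeader, taskId.ToString());
        options.SetHeader(ResponseEndpointHeader, responseEndpoint);

        // No explicit destination here: the command is routed by the
        // endpoint's normal routing configuration.
        return session.Send(new CreateUser { UserName = userName }, options);
    }
}
```

Keeping the header names in one place makes it harder for the Gateway and the forwarder to drift apart on spelling.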

I implemented Behavior<IOutgoingPublishContext> and inserted it into the pipeline:

    using System;
    using System.Threading.Tasks;
    using Newtonsoft.Json;
    using NServiceBus;
    using NServiceBus.Pipeline;

    public class ForwardToResponseHub : Behavior<IOutgoingPublishContext>
    {
        public const string Description = "Copy events to an endpoint as a command";

        private readonly string _destinationHeader;

        public ForwardToResponseHub(string destinationHeader)
        {
            _destinationHeader = destinationHeader;
        }

        public override async Task Invoke(IOutgoingPublishContext context, Func<Task> next)
        {
            if (context.Headers.TryGetValue(_destinationHeader, out var responseHub))
            {
                // BUG? Context isn't maintaining headers so I'm manually copying them over
                var options = new SendOptions();
                options.SetDestination(responseHub);

                foreach (var header in context.Headers)
                {
                    // Ordinal comparison: header keys are identifiers, not culture-sensitive text
                    if (header.Key.StartsWith("Arsenal", StringComparison.Ordinal))
                    {
                        options.SetHeader(header.Key, header.Value);
                    }
                }

                // I'm using a special message to wrap this because the original message is an event
                // but the reply goes to a specific address, which makes it a command. If you try to
                // "send" an event, it will throw. By wrapping the message content in a command, we avoid that.
                var messageJson = JsonConvert.SerializeObject(context.Message.Instance);

                await context
                    .Send<ResponseHubReply>(c => { c.Message = messageJson; }, options)
                    .ConfigureAwait(false);
            }

            // Let the publish itself continue as usual
            await next().ConfigureAwait(false);
        }
    }
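The registration itself is not shown above, so here is a minimal sketch of how a behavior like this is typically plugged in through the endpoint configuration. The `ForwardingConfiguration` helper and the "Arsenal.ResponseEndpoint" header name are assumptions for illustration only.

```csharp
using NServiceBus;

public static class ForwardingConfiguration
{
    // Assumed header name; use whatever key the Gateway actually sets.
    private const string ResponseEndpointHeader = "Arsenal.ResponseEndpoint";

    public static void RegisterForwarder(EndpointConfiguration endpointConfiguration)
    {
        // Adds the behavior to the pipeline; it is placed in the publish stage
        // based on the context type the behavior is declared with.
        endpointConfiguration.Pipeline.Register(
            new ForwardToResponseHub(ResponseEndpointHeader),
            ForwardToResponseHub.Description);
    }
}
```

Every service that should forward its events would need this registration, since the behavior only runs in endpoints where it is registered.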

The thought behind this (which is currently in contention) is that whenever a service publishes an event saying that something happened (e.g. UserCreated or CreateUserFailed), this forwarder checks whether there is a ResponseEndpoint in the headers. If so, it wraps the event in a command and sends it straight to the endpoint indicated in the header which is, in this case, the SignalR hub. That hub then uses the remaining headers (UserId and TaskId) to blindly forward the message to the user that started the task.
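On the receiving side, the hub endpoint could handle the wrapper command along these lines. This is only a sketch: the shape of `ResponseHubReply` is inferred from how the forwarder uses it, `IUiNotifier` is a hypothetical stand-in for the actual SignalR hub call, and the header names are assumed.

```csharp
using System.Threading.Tasks;
using NServiceBus;

// Assumed shape of the wrapper command, inferred from the forwarder above.
public class ResponseHubReply : ICommand
{
    public string Message { get; set; }
}

// Hypothetical abstraction over the SignalR hub, so the sketch does not
// depend on a particular SignalR version.
public interface IUiNotifier
{
    Task NotifyUser(string userId, string taskId, string payloadJson);
}

public class ResponseHubReplyHandler : IHandleMessages<ResponseHubReply>
{
    private readonly IUiNotifier _notifier;

    public ResponseHubReplyHandler(IUiNotifier notifier)
    {
        _notifier = notifier;
    }

    public Task Handle(ResponseHubReply message, IMessageHandlerContext context)
    {
        // The routing information travels in the headers, not in the body.
        if (!context.MessageHeaders.TryGetValue("Arsenal.UserId", out var userId) ||
            !context.MessageHeaders.TryGetValue("Arsenal.TaskId", out var taskId))
        {
            // Without both headers there is no user to route to, so the
            // message is dropped here. Logging it may be preferable.
            return Task.CompletedTask;
        }

        // Blindly forward the serialized event to the user who started the task.
        return _notifier.NotifyUser(userId, taskId, message.Message);
    }
}
```

The notifier would have to be registered with the endpoint's container for the constructor injection to work.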

Here’s the current state of my implementation:

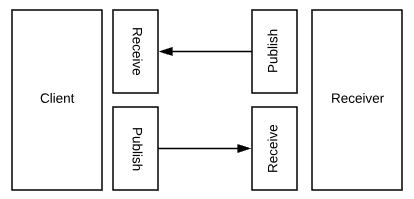

The point of contention raised by @andreasohlund is that this should probably be done in the receive pipeline, which I’m having a hard time understanding at the moment:

> Feels off that you send the responses as part of publishing. What if you receive a message that should receive UI feedback that it was complete, but it would, let's say, just update a DB? In that scenario you would not send the feedback message.

I thought doing it in the publish pipeline made sense because essentially what I’m doing is splitting the message; the event goes to the bus but it also goes direct to the indicated endpoint. The event goes out to the bus as per usual so that any explicit subscriptions are fulfilled (e.g. Sagas), but I also ensure that the message goes straight into the SignalR hub’s queue. Any other service involved in the transaction would do the same; any events that came out of their operation would make it back to the UI.

@andreasohlund, you’re suggesting that I do it in the receive pipeline which I’m having trouble understanding. The receive pipeline would be a receiver of the event, correct? If nothing else is subscribed directly to that event then, if I’m understanding correctly, that pipeline would never be touched and thus the message would never make it to SignalR.
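To make the comparison concrete, here is one possible reading of the receive-side suggestion, sketched as a behavior on the incoming pipeline. This is my interpretation and not something confirmed in the thread; the class name is hypothetical and `ResponseHubReply` is the same wrapper command used by the publish-side forwarder.

```csharp
using System;
using System.Threading.Tasks;
using Newtonsoft.Json;
using NServiceBus;
using NServiceBus.Pipeline;

// Hypothetical receive-side counterpart to ForwardToResponseHub.
public class NotifyResponseHubAfterProcessing : Behavior<IIncomingLogicalMessageContext>
{
    private readonly string _destinationHeader;

    public NotifyResponseHubAfterProcessing(string destinationHeader)
    {
        _destinationHeader = destinationHeader;
    }

    public override async Task Invoke(IIncomingLogicalMessageContext context, Func<Task> next)
    {
        // Run the handlers first. If one of them throws, the exception
        // propagates and no feedback message is sent.
        await next().ConfigureAwait(false);

        if (!context.Headers.TryGetValue(_destinationHeader, out var responseHub))
        {
            return;
        }

        var options = new SendOptions();
        options.SetDestination(responseHub);

        // Carry the custom headers of the incoming message over to the feedback message.
        foreach (var header in context.Headers)
        {
            if (header.Key.StartsWith("Arsenal", StringComparison.Ordinal))
            {
                options.SetHeader(header.Key, header.Value);
            }
        }

        // Note: this serializes the message that was just processed,
        // not any event published while handling it.
        var messageJson = JsonConvert.SerializeObject(context.Message.Instance);

        await context
            .Send<ResponseHubReply>(c => { c.Message = messageJson; }, options)
            .ConfigureAwait(false);
    }
}
```

With this shape the UI would hear that a message was processed even when the handler publishes nothing, which seems to be the DB-only case from the quote. It runs only in endpoints that actually receive the message, though, which is the concern raised above.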

I thought the original suggestion was to remove the inheritance approach so that the SignalR hub didn’t have to subscribe to IUpdateUi. I liked that suggestion because I felt it was a little less obtrusive.

I think that even if the message is just that a database was updated (e.g. event = UserUpdated), I would still send that feedback to the UI. Why? Maybe they have a spinner on the page and that was the event we decided would indicate that the operation was complete (in the case where there is no associated Saga to give a more precise event, for example). That event would tell them to remove the spinner.

> I might have missed something but it seems to me that it’s the successful processing of the message coming from the UI that is the important part here?

It is, but for our project, that can get more complex than only alerting success or only alerting failure. Here are some points I was thinking of:

- The UI wants to know about success. Just tell the UI if something is done
  - Simple tasks that are not part of a Saga
  - Distributed tasks that are part of a Saga (many events)
    - Maybe the UI wants to show progress (imagine a % indicator or a checklist). For that, they would be interested in several events for a single task
    - This could be useful for troubleshooting. If the UI kept a checklist and those items were checked off as those events came rolling in for that taskId, those that failed could turn to a Red X or something and we’d know exactly what step it failed on.
- The UI wants to know about failure
  - An error comes out for a task the UI is waiting on (I use the term ‘waiting’ loosely) because of something the user can fix. Show a toast notification that an error happened. Click on it to go back to a page that will allow them to correct something and resubmit.
  - An error comes out for a task the UI is waiting on and it’s not something the user can fix. At least notify them so that they know the task was not completed.

/discuss