Disclaimer: We’re getting into the territory of domains and architecture. Technical questions like “How do I route MessageX to endpointY” or “How do I set up logging” are much, much easier to answer. Domain and architectural questions suffer from assumptions and misinterpretations that can lead to misunderstandings. I apologize if I misunderstand or assume something incorrectly.

Now allow me to ask some questions:

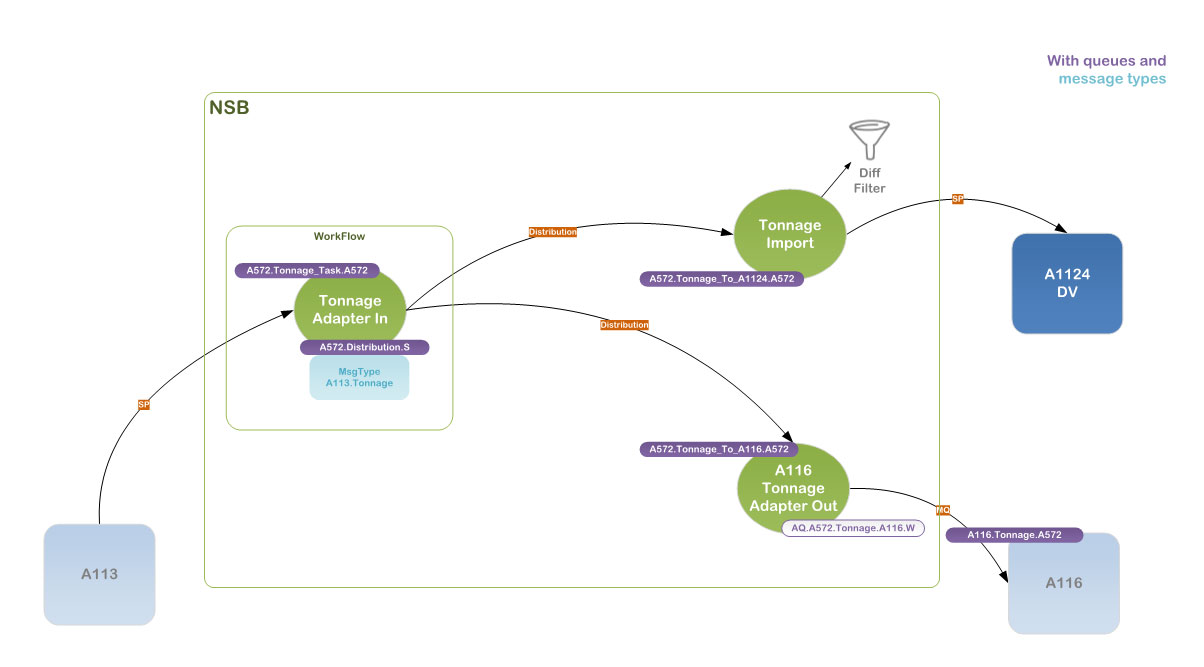

- In the conceptual view you’re showing solutions centers and a more detailed view of the real-time flows under the transport solutions center. Does MOM have something to do with messaging or a service bus?

- Do different flows inside solutions centers send messages to each other?

- You mention that the “green balls” and “violets” are queues, producers and more, which I don’t understand from the concrete example. But the Tonnage Adapter In already has two queues?

- What is A113 and what does SP mean?

- What do you mean by mentioning distribution? Does it mean you do data distribution? Is it just sending a message (either command or event) to the other endpoints?

I’m also not sure if I made the following terms clear.

Handler

This is the easiest one, a handler processes a message. Technically, we usually have a single handler in a single class. So that would result in something like the following pseudo code:

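    public class OrderReceivedHandler : IHandleMessages<OrderReceived>
    {
        // NServiceBus calls this method for every incoming OrderReceived message.
        public Task Handle(OrderReceived message, IMessageHandlerContext context)
        {
            // Some code
            return Task.CompletedTask;
        }
    }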

However, we can also have:

- Multiple handlers inside a single class

- Multiple handlers inside different classes for the same message-type.
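To illustrate the first variation, here is a minimal sketch of one class that handles two message types. `OrderCancelled` and the class name `OrderHandlers` are hypothetical names made up for this example, and the `Handle` signature assumes NServiceBus 6 or later.

```csharp
using System.Threading.Tasks;
using NServiceBus;

// Hypothetical message types, only here to make the sketch complete.
public class OrderReceived : IMessage { }
public class OrderCancelled : IMessage { }

// One class, two handlers: the Handle overload that matches the type
// of the incoming message is the one that gets invoked.
public class OrderHandlers :
    IHandleMessages<OrderReceived>,
    IHandleMessages<OrderCancelled>
{
    public Task Handle(OrderReceived message, IMessageHandlerContext context)
    {
        // Some code for OrderReceived
        return Task.CompletedTask;
    }

    public Task Handle(OrderCancelled message, IMessageHandlerContext context)
    {
        // Some code for OrderCancelled
        return Task.CompletedTask;
    }
}
```

The second variation is simply two separate classes that both implement `IHandleMessages<OrderReceived>`.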

Endpoint

An endpoint is basically a container for one or more handlers, sagas, etc. We talk about a logical endpoint during design and development, and about a physical endpoint or endpoint instance once it is deployed. You start out with a single endpoint instance, but if you want to scale out or achieve high availability, you might have multiple instances of the same logical endpoint.

The most common scenario is hosting multiple handlers inside a single logical endpoint. A less common scenario is using a single logical endpoint for all handlers. No customer that I am aware of uses a separate logical endpoint for each individual handler.

Every endpoint has its own queue. By default the name of a logical endpoint is the name of the queue, although specific transports might adjust the physical name as they see fit. Thus if you have multiple endpoint instances, they all read from the same queue. The only exception is MSMQ, because technically it works differently.

An endpoint is hosting agnostic.

Host

A host is something that enables an endpoint to function. We can have a Windows Service as a host, or a simple console application. We can also use Azure Service Fabric or IIS (a web application) to host our endpoints.
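As a rough sketch of the simplest host, a console application could start a single endpoint like this. It assumes the NServiceBus 7 API; the endpoint name `Sales` and the choice of `LearningTransport` are assumptions made only to keep the example small.

```csharp
using System;
using System.Threading.Tasks;
using NServiceBus;

public static class Program
{
    public static async Task Main()
    {
        // "Sales" is the logical endpoint name and, by default, also the queue name.
        var endpointConfiguration = new EndpointConfiguration("Sales");

        // LearningTransport is an assumption; a real system would use its own transport.
        endpointConfiguration.UseTransport<LearningTransport>();

        // Starting the endpoint turns this process into one endpoint instance.
        var endpointInstance = await Endpoint.Start(endpointConfiguration);

        Console.WriteLine("Press Enter to stop the endpoint.");
        Console.ReadLine();

        await endpointInstance.Stop();
    }
}
```

Running the same program a second time would give you a second instance of the same logical endpoint.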

As far as I know, almost all customers use a single host per logical endpoint, not taking scaling into account. This means hosting multiple endpoints per host is rarely seen in the wild. The biggest reason is that it’s technically more complex and usually doesn’t have any benefit.

That being said, I’ve seen customers with dozens of hosts, each hosting a single logical endpoint, and almost all of those endpoints contain multiple handlers. So (hypothetically) if a customer has 250 different message types (which is a large number!) and you put 10 handlers inside each endpoint, you’d end up with 25 physically deployed endpoints, assuming a single handler per message type and no scaling out. That’s not an odd scenario, besides the fact that you might need to convince operations.

Some remarks

@macdonald-k mentioned that he uses “one Windows Service for each logical functionality grouping”.

Does this mean you have multiple handlers inside a logical endpoint, or something else?

You mentioned “per domain” and in my experience you can easily start out with a single logical endpoint per domain, but it’s definitely not uncommon to have several endpoints per domain. It also depends on consistency, scaling and other requirements.

@macdonald-k also mentioned: “and I can spin up other instances of the service, and indeed even skip scaling out specific endpoints if needed, to scale.”

We sometimes see customers with a large number of handlers in a single endpoint, requesting information on how to scale out. Our first suggestion is to not have too many handlers inside a single endpoint. Splitting handlers across endpoints is the first and easiest step towards scaling out.

Obviously having a single handler in every logical endpoint is theoretically the best way to achieve this, but in practice this results in a maintenance nightmare.

“with events, as their routing is managed by the NSB framework, as far as I understand.”

It depends on the queuing technology (transport) being used, but that’s not the view you should have on it.

You send messages from one handler to another handler. Because handlers are inside endpoints, we usually say endpoints send messages to other endpoints. But since HandlerA could send a message to HandlerB and both could be inside the same endpoint, it gets kind of fuzzy.

But since handlers live inside endpoints, and endpoints and queues have a one-to-one relationship, we still talk about it this way.
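The fuzzy case, where HandlerA sends a message to HandlerB inside the same endpoint, could look roughly like the sketch below. `MessageA` and `MessageB` are hypothetical message types, and the code assumes NServiceBus 6 or later.

```csharp
using System.Threading.Tasks;
using NServiceBus;

// Hypothetical messages for illustration.
public class MessageA : ICommand { }
public class MessageB : ICommand { }

public class HandlerA : IHandleMessages<MessageA>
{
    public Task Handle(MessageA message, IMessageHandlerContext context)
    {
        // SendLocal puts MessageB on the queue of the endpoint that is
        // processing MessageA, so HandlerB runs in the same logical endpoint.
        return context.SendLocal(new MessageB());
    }
}

public class HandlerB : IHandleMessages<MessageB>
{
    public Task Handle(MessageB message, IMessageHandlerContext context)
    {
        // Some code
        return Task.CompletedTask;
    }
}
```

Even here the message travels through the endpoint's queue, which is why talking about endpoints sending to endpoints still holds up.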

Commands

One endpoint is responsible for, and the owner of, a command. Other endpoints send the command to that owning endpoint. So if the Sales endpoint is responsible for the AcceptOrder command, the routing in a sending endpoint looks like this:

    var transport = endpointConfiguration.UseTransport<MyTransport>();
    var routing = transport.Routing();
    routing.RouteToEndpoint(assembly: typeof(AcceptOrder).Assembly, destination: "Sales");
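With that routing in place, sending the command needs no destination in code. The following is only a sketch: the `OrderId` property and the `OrderSender` helper are hypothetical, and it assumes NServiceBus 6 or later, where a started endpoint instance can be used as an `IMessageSession`.

```csharp
using System.Threading.Tasks;
using NServiceBus;

// Assumed shape of the command; the OrderId property is hypothetical.
public class AcceptOrder : ICommand
{
    public string OrderId { get; set; }
}

public static class OrderSender
{
    // No destination is given here: the RouteToEndpoint configuration
    // decides that AcceptOrder is delivered to the "Sales" endpoint.
    public static Task SendAcceptOrder(IMessageSession session, string orderId)
    {
        return session.Send(new AcceptOrder { OrderId = orderId });
    }
}
```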

Events

If Sales now publishes the event OrderAccepted, it just publishes it and has no idea who is responding.
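A minimal sketch of what that publish could look like inside the Sales endpoint, assuming NServiceBus 6 or later. The `OrderId` properties and the handler name are hypothetical.

```csharp
using System.Threading.Tasks;
using NServiceBus;

// Assumed message shapes; the OrderId properties are hypothetical.
public class AcceptOrder : ICommand
{
    public string OrderId { get; set; }
}

public class OrderAccepted : IEvent
{
    public string OrderId { get; set; }
}

public class AcceptOrderHandler : IHandleMessages<AcceptOrder>
{
    public Task Handle(AcceptOrder message, IMessageHandlerContext context)
    {
        // Some code that accepts the order, then announce the fact.
        // Sales does not name any receiver here.
        return context.Publish(new OrderAccepted { OrderId = message.OrderId });
    }
}
```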

If other endpoints are interested in this event, they register themselves as interested using this:

    var transport = endpointConfiguration.UseTransport<MyTransport>();
    var routing = transport.Routing();
    routing.RegisterPublisher(assembly: typeof(OrderAccepted).Assembly, publisherEndpoint: "Sales");

When you’re using a transport that natively supports pub/sub, you don’t have to do the above. RabbitMQ is one of those transports.

Subsets of domain messages

If you have subsets of domain messages, you can do something like the following:

    // Register a single type
    routing.RegisterPublisher(typeof(OrderAccepted), "Sales");

    // Register all types inside an assembly
    routing.RegisterPublisher(typeof(OrderAccepted).Assembly, "Sales");

    // Register all types inside a namespace
    routing.RegisterPublisher(typeof(OrderAccepted).Assembly, typeof(OrderAccepted).Namespace, "Sales");
    routing.RegisterPublisher(typeof(OrderAccepted).Assembly, "Sales.Events", "Sales");

I hope this helps clarify things a bit. Let me know if you need more information.
