We recently kicked off a saga that processes a file in which each line is a financial transaction. The initial (file-level) saga fires off a message for each line in the file, and each of these messages starts a new instance of a different saga type that processes that individual record.

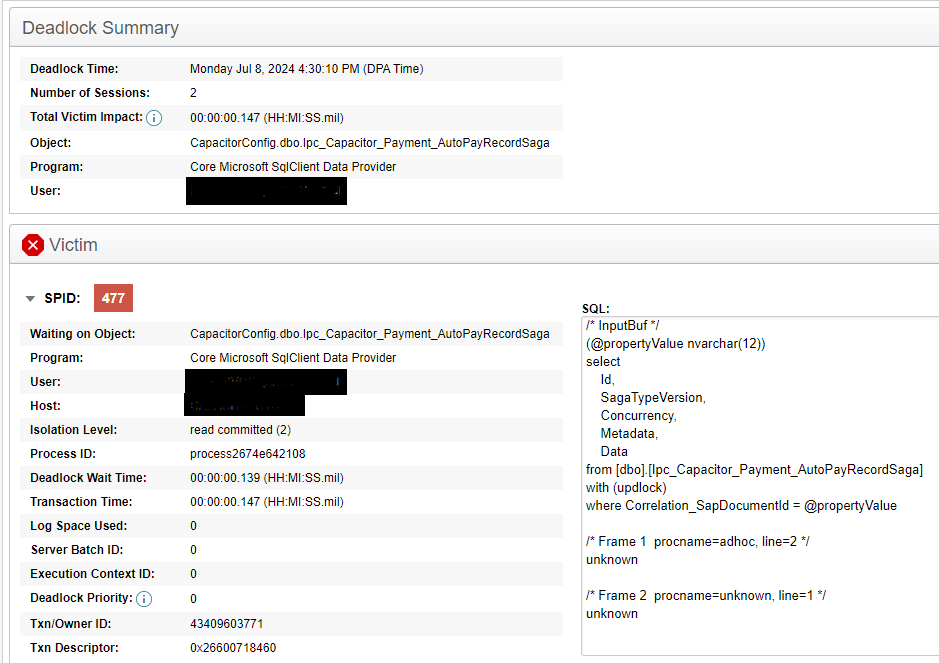

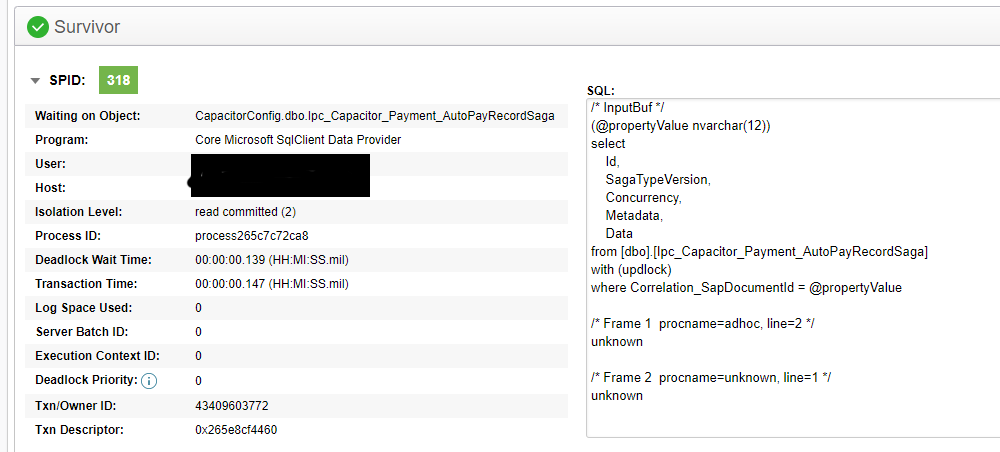
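
To make the fan-out described above concrete, here is a minimal Python sketch. It rests on stated assumptions: the message type `ProcessRecord`, the `InMemoryBus`, the dictionary used as a saga store, and the `file_id:line_number` correlation key are all hypothetical stand-ins, not our actual NServiceBus code. What it shows is that every line produces its own message, and each record-level saga instance is located by a per-record correlation value. That lookup is the step that deadlocked in the stack trace below (`PropertySagaFinder.Find` calling `SagaPersister.Get`).

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ProcessRecord:
    """Hypothetical message: one per line of the input file."""
    file_id: str
    line_number: int
    payload: str


@dataclass
class RecordSagaData:
    """Hypothetical record-level saga state, correlated on record_id."""
    record_id: str
    payload: str
    completed: bool = False


class InMemoryBus:
    """Stand-in for the transport: collects outgoing messages instead of sending them."""

    def __init__(self):
        self.outgoing = []

    def send(self, message):
        self.outgoing.append(message)


def start_file_saga(file_id, lines, bus):
    """File-level saga: fire one message per line in the file."""
    for line_number, line in enumerate(lines, start=1):
        bus.send(ProcessRecord(file_id, line_number, line))


def handle_process_record(message, saga_store):
    """Record-level saga: find the instance by its correlation value, or start a new one."""
    record_id = f"{message.file_id}:{message.line_number}"
    # This dictionary lookup stands in for the saga lookup (SagaPersister.Get)
    # that hit the SQL Server deadlock in production.
    data = saga_store.get(record_id)
    if data is None:
        data = RecordSagaData(record_id, message.payload)
        saga_store[record_id] = data
    # ... process the financial transaction here ...
    data.completed = True
    return data


if __name__ == "__main__":
    bus = InMemoryBus()
    start_file_saga("file-001", ["100.00,ACC1", "25.50,ACC2", "7.25,ACC3"], bus)
    store = {}
    for msg in bus.outgoing:
        handle_process_record(msg, store)
    print(sorted(store))  # ['file-001:1', 'file-001:2', 'file-001:3']
```

Keying each saga on a per-record value means that, in principle, no two record-level messages should be competing for the same saga row, which is part of why the deadlocks were surprising.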

Early in the run, we got about 10 failures in the record-level saga, caused by a database deadlock while NServiceBus was trying to find the saga instance. Our database is SQL Server and our message transport is Azure Service Bus. The stack trace is below. We keep saga persistence and our business (transactional) data in separate databases, and we do not use distributed transactions.

The good news is that the logs indicate the messages were retried and everything turned out okay, but we would like to understand why the deadlocks happened.

    Timestamp=2024-07-08 16:30:21|Level=Information|Message=Immediate Retry is going to retry message ‘6ec9b341-3b64-47ad-9f25-b1a70172c24e’ because of an exception:|TraceId=|Exception=Microsoft.Data.SqlClient.SqlException (0x80131904): Transaction (Process ID 477) was deadlocked on lock resources with another process and has been chosen as the deadlock victim. Rerun the transaction.
       at Microsoft.Data.SqlClient.SqlConnection.OnError(SqlException exception, Boolean breakConnection, Action`1 wrapCloseInAction)
       at Microsoft.Data.SqlClient.SqlInternalConnection.OnError(SqlException exception, Boolean breakConnection, Action`1 wrapCloseInAction)
       at Microsoft.Data.SqlClient.TdsParser.ThrowExceptionAndWarning(TdsParserStateObject stateObj, Boolean callerHasConnectionLock, Boolean asyncClose)
       at Microsoft.Data.SqlClient.TdsParser.TryRun(RunBehavior runBehavior, SqlCommand cmdHandler, SqlDataReader dataStream, BulkCopySimpleResultSet bulkCopyHandler, TdsParserStateObject stateObj, Boolean& dataReady)
       at Microsoft.Data.SqlClient.SqlDataReader.TryHasMoreRows(Boolean& moreRows)
       at Microsoft.Data.SqlClient.SqlDataReader.TryReadInternal(Boolean setTimeout, Boolean& more)
       at Microsoft.Data.SqlClient.SqlDataReader.ReadAsyncExecute(Task task, Object state)
       at Microsoft.Data.SqlClient.SqlDataReader.InvokeAsyncCall[T](AAsyncCallContext`1 context)
    --- End of stack trace from previous location ---
       at SagaPersister.GetSagaData[TSagaData](SynchronizedStorageSession session, String commandText, RuntimeSagaInfo sagaInfo, ParameterAppender appendParameters) in /_/src/SqlPersistence/Saga/SagaPersister_Get.cs:line 104
       at SagaPersister.Get[TSagaData](String propertyName, Object propertyValue, SynchronizedStorageSession session, ContextBag context) in /_/src/SqlPersistence/Saga/SagaPersister_Get.cs:line 32
       at NServiceBus.PropertySagaFinder`1.Find(IBuilder builder, SagaFinderDefinition finderDefinition, SynchronizedStorageSession storageSession, ContextBag context, Object message, IReadOnlyDictionary`2 messageHeaders) in /_/src/NServiceBus.Core/Sagas/PropertySagaFinder.cs:line 43
       at NServiceBus.SagaPersistenceBehavior.Invoke(IInvokeHandlerContext context, Func`2 next) in /_/src/NServiceBus.Core/Sagas/SagaPersistenceBehavior.cs:line 164
       at Ipc.Util.Capacitor.Behaviors.IncomingLoggingBehavior.Invoke(IInvokeHandlerContext context, Func`1 next)
       at NServiceBus.LoadHandlersConnector.Invoke(IIncomingLogicalMessageContext context, Func`2 stage) in /_/src/NServiceBus.Core/Pipeline/Incoming/LoadHandlersConnector.cs:line 42
       at NServiceBus.ScheduledTaskHandlingBehavior.Invoke(IIncomingLogicalMessageContext context, Func`2 next) in /_/src/NServiceBus.Core/Scheduling/ScheduledTaskHandlingBehavior.cs:line 23
       at NServiceBus.InvokeSagaNotFoundBehavior.Invoke(IIncomingLogicalMessageContext context, Func`2 next) in /_/src/NServiceBus.Core/Sagas/InvokeSagaNotFoundBehavior.cs:line 16
       at NServiceBus.DeserializeMessageConnector.Invoke(IIncomingPhysicalMessageContext context, Func`2 stage) in /_/src/NServiceBus.Core/Pipeline/Incoming/DeserializeMessageConnector.cs:line 34
       at ReceivePerformanceDiagnosticsBehavior.Invoke(IIncomingPhysicalMessageContext context, Func`2 next) in D:\a\NServiceBus.Metrics\NServiceBus.Metrics\src\NServiceBus.Metrics\ProbeBuilders\ReceivePerformanceDiagnosticsBehavior.cs:line 27
       at Ipc.Util.Capacitor.Behaviors.TraceIncomingMessagesBehavior.Invoke(IIncomingPhysicalMessageContext context, Func`1 next)
       at NServiceBus.InvokeAuditPipelineBehavior.Invoke(IIncomingPhysicalMessageContext context, Func`2 next) in /_/src/NServiceBus.Core/Audit/InvokeAuditPipelineBehavior.cs:line 27
       at NServiceBus.ProcessingStatisticsBehavior.Invoke(IIncomingPhysicalMessageContext context, Func`2 next) in /_/src/NServiceBus.Core/Performance/Statistics/ProcessingStatisticsBehavior.cs:line 32
       at NServiceBus.TransportReceiveToPhysicalMessageConnector.Invoke(ITransportReceiveContext context, Func`2 next) in /_/src/NServiceBus.Core/Pipeline/Incoming/TransportReceiveToPhysicalMessageConnector.cs:line 61
       at NServiceBus.RetryAcknowledgementBehavior.Invoke(ITransportReceiveContext context, Func`2 next) in /_/src/NServiceBus.Core/ServicePlatform/Retries/RetryAcknowledgementBehavior.cs:line 46
       at NServiceBus.MainPipelineExecutor.Invoke(MessageContext messageContext) in /_/src/NServiceBus.Core/Pipeline/MainPipelineExecutor.cs:line 50
       at NServiceBus.TransportReceiver.InvokePipeline(MessageContext c) in /_/src/NServiceBus.Core/Transports/TransportReceiver.cs:line 66
       at NServiceBus.TransportReceiver.InvokePipeline(MessageContext c) in /_/src/NServiceBus.Core/Transports/TransportReceiver.cs:line 66
       at NServiceBus.Transport.AzureServiceBus.MessagePump.ReceiveMessage(CancellationToken messageReceivingCancellationToken) in /_/src/Transport/Receiving/MessagePump.cs:line 298
    ClientConnectionId:57bdaa88-245e-4059-b2ce-d6bae25495ba
    Error Number:1205,State:51,Class:13
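
For context on why the retries recovered: SQL Server rolls back the deadlock victim's transaction (error 1205 in the log above), which is why the message says "Rerun the transaction", so the message can simply be processed again. The Python sketch below models that immediate-retry loop under assumptions. `SqlDeadlockError` is a hypothetical stand-in for a `SqlException` whose `Number` is 1205, `make_flaky_handler` is a hypothetical handler that loses the deadlock a fixed number of times, and `max_retries=5` is assumed to mirror the NServiceBus default for immediate retries. It is not NServiceBus's actual recoverability code.

```python
class SqlDeadlockError(Exception):
    """Hypothetical stand-in for a SqlException with Number == 1205."""
    number = 1205


def process_with_immediate_retries(handler, message, max_retries=5):
    """Run handler(message); on a deadlock, retry immediately up to max_retries times.

    Returns (result, retries_used). Re-raises once retries are exhausted
    (in NServiceBus, that is the point where delayed retries would take over).
    """
    retries_used = 0
    while True:
        try:
            return handler(message), retries_used
        except SqlDeadlockError:
            # The victim's transaction was rolled back, so rerunning is safe.
            if retries_used >= max_retries:
                raise
            retries_used += 1


def make_flaky_handler(failures):
    """Hypothetical handler: chosen as deadlock victim `failures` times, then succeeds."""
    calls = {"count": 0}

    def handler(message):
        calls["count"] += 1
        if calls["count"] <= failures:
            raise SqlDeadlockError("chosen as the deadlock victim")
        return f"processed {message}"

    return handler


if __name__ == "__main__":
    result, retries = process_with_immediate_retries(make_flaky_handler(2), "record-42")
    print(result, "after", retries, "retries")  # processed record-42 after 2 retries
```

This matches what we observed: the deadlock is transient, so a later attempt succeeds once the competing transaction has finished.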