Simplifying configuration with Azure App Configuration

When working with NServiceBus on a decent-sized system, you inevitably end up with lots of endpoints and lots of configuration. In this article, I’ll show you how Azure App Configuration has vastly simplified our architecture.

In .NET Core, configuration is typically stored in the appsettings.json file. We use an internal helper library and some strongly typed configuration to help bootstrap our NServiceBus endpoints and logging.

This is an example of what an appsettings.json file might look like for a local development environment:

    {
      "EndpointSettings": {
        "Environment": "Dev",
        "TransportConnection": "Data Source=(local);Initial Catalog=NSB;Integrated Security=True;",
        "PersistenceConnection": "Data Source=(local);Initial Catalog=BusinessDatabase;Integrated Security=True;",
        "ServiceControlQueue": "Particular.ServiceControl",
        "ServiceControlMetricsQueue": "Particular.Monitoring",
        "MonitoringEnabled": "True",
        "AuditSagaChanges": "True",
        "TransportType": "SQL",
        "MultiTenant": "False",
        "HostNameOverride": "",
        "HeartbeatFrequency": 10
      },
      "LoggingSettings": {
        "Papertrail": {
          "Enabled": true,
          "Server": "logs123.papertrailapp.com",
          "Port": 12345,
          "SyslogHostname": ""
        }
      }
    }

For the most part, this configuration stays consistent across endpoints in a given environment, apart from the PersistenceConnection, which can differ per endpoint.
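
The strongly typed configuration mentioned earlier can be pictured roughly as follows. This is a minimal sketch, not our internal helper library: the `EndpointSettings` class is a hypothetical shape inferred from the JSON above, and an in-memory collection stands in for appsettings.json so the snippet runs on its own. It assumes the Microsoft.Extensions.Configuration and Microsoft.Extensions.Configuration.Binder packages are referenced.

```csharp
using System;
using System.Collections.Generic;
using Microsoft.Extensions.Configuration;

// In-memory stand-in for a few of the appsettings.json values shown above.
var configuration = new ConfigurationBuilder()
    .AddInMemoryCollection(new Dictionary<string, string?>
    {
        ["EndpointSettings:Environment"] = "Dev",
        ["EndpointSettings:TransportType"] = "SQL",
        ["EndpointSettings:MonitoringEnabled"] = "True",
        ["EndpointSettings:HeartbeatFrequency"] = "10",
    })
    .Build();

// Bind the "EndpointSettings" section onto a plain class.
var settings = configuration.GetSection("EndpointSettings").Get<EndpointSettings>()
    ?? throw new InvalidOperationException("EndpointSettings section is missing.");

Console.WriteLine($"{settings.Environment}, {settings.TransportType}, monitoring: {settings.MonitoringEnabled}");

// Hypothetical settings class; property names mirror the JSON keys.
public class EndpointSettings
{
    public string Environment { get; set; } = "";
    public string TransportType { get; set; } = "";
    public bool MonitoringEnabled { get; set; }
    public int HeartbeatFrequency { get; set; }
}
```

The binder converts string values such as "True" and "10" to `bool` and `int`, which is why the mixed quoting in the JSON above still binds cleanly.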

Until recently, all these endpoints ran as Windows services, and all the configuration was handled as part of the deployment process with Octopus Deploy. This worked pretty well for us; however, the developer experience was not perfect.

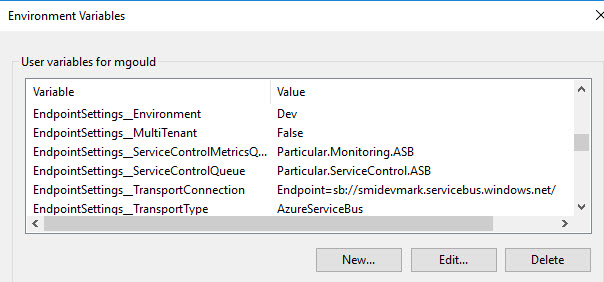

To simplify the development experience, we moved to using the Microsoft.Extensions.Configuration.EnvironmentVariables library (Configuration in ASP.NET Core | Microsoft Learn), which, as the name suggests, reads configuration from environment variables. The base config was still loaded from appsettings.json, and then any matching environment variables would take precedence.
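
To make the precedence concrete, here is a small sketch of how a later provider overrides an earlier one. It is illustrative only: an in-memory collection stands in for appsettings.json, and the environment variable is set from code purely so the example is self-contained. The double underscore in the variable name is how the environment variables provider represents the `:` section separator.

```csharp
using System;
using System.Collections.Generic;
using Microsoft.Extensions.Configuration;

// Simulate an environment variable for this process only.
Environment.SetEnvironmentVariable("EndpointSettings__Environment", "Test");

var configuration = new ConfigurationBuilder()
    // Stand-in for the base values that would normally come from appsettings.json.
    .AddInMemoryCollection(new Dictionary<string, string?>
    {
        ["EndpointSettings:Environment"] = "Dev",
        ["EndpointSettings:TransportType"] = "SQL",
    })
    // Added last, so matching environment variables win.
    .AddEnvironmentVariables()
    .Build();

// Overridden by the environment variable.
Console.WriteLine(configuration["EndpointSettings:Environment"]);
// No matching variable is set here, so the base value is kept.
Console.WriteLine(configuration["EndpointSettings:TransportType"]);
```

Assuming no other matching variables exist on the machine, this should print Test followed by SQL.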

Now that we’re hosting some of our customers’ systems on Azure, we want to start taking advantage of the benefits that brings us, such as using Azure Service Bus and containers. The same endpoint code can use the SQL Transport when running on-premises, or Azure Service Bus in the cloud, simply by changing the TransportConnection and TransportType.

When running as containers, we have one image that is run across many environments, so we need a way to configure each instance. We’re currently running our endpoints (other than send-only website endpoints) with Azure Container Instances, and deploying using a multi-container group YAML file (Tutorial - Deploy multi-container group - YAML - Azure Container Instances | Microsoft Learn). We’re able to pass environment variables in this file, and they override any settings in the container’s appsettings.json. Our first attempt looked like this:

    apiVersion: '2018-10-01'
    location: southcentralus
    name: container-group-name
    properties:
      containers:
      - name: endpoint-name-1
        properties:
          environmentVariables:
          - name: EndpointSettings__Environment
            value: Test
          - name: EndpointSettings__TransportConnection
            secureValue: Endpoint=sb://SERVERNAME.servicebus.windows.net/;
          - name: EndpointSettings__PersistenceConnection
            secureValue: Data Source=SERVERNAME.database.windows.net;Initial Catalog=DatabaseName;
          - name: EndpointSettings__ServiceControlQueue
            value: Particular.ServiceControl
          - name: EndpointSettings__ServiceControlMetricsQueue
            value: Particular.Monitoring
          - name: EndpointSettings__MonitoringEnabled
            value: 'True'
          - name: EndpointSettings__AuditSagaChanges
            value: 'False'
          - name: EndpointSettings__TransportType
            value: AzureServiceBus
          - name: EndpointSettings__MultiTenant
            value: 'True'
          - name: EndpointSettings__HostNameOverride
            value: endpoint-name-1
          - name: EndpointSettings__HeartbeatFrequency
            value: 60
          - name: LoggingSettings__Papertrail__Server
            value: logs123.papertrailapp.com
          - name: LoggingSettings__Papertrail__Port
            value: 12345
          - name: LoggingSettings__Papertrail__SyslogHostname
            value: endpoint-name-1
          image: registry.azurecr.io/image.name:1.2.3

This worked, and got us up and running. However, for each endpoint we added, most of that config was simply repeated. Keeping everything in sync, and having to redeploy every time we made a config change, became a chore.

Enter Azure App Configuration

Azure App Configuration (in preview at the time of writing) is a service that helps you centralize your application and feature settings (Azure App Configuration documentation | Microsoft Learn).

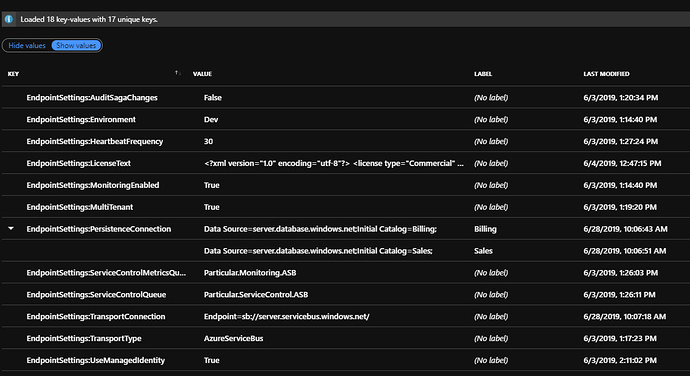

With Azure App Configuration, you simply define keys and values, with optional labels. We want separation between our environments, so we have one App Configuration resource per environment.

Building on the work we’ve done to support environment variables, I wanted to see how we could integrate our endpoints with this service. This is the code I came up with:

    private static IConfigurationRoot BuildConfiguration(string endpointName)
    {
        // Load local settings first: appsettings.json, then environment variables on top.
        var configBuilder = new ConfigurationBuilder()
            .SetBasePath(Directory.GetCurrentDirectory())
            .AddJsonFile("appsettings.json", optional: true, reloadOnChange: true)
            .AddEnvironmentVariables();

        var configuration = configBuilder.Build();

        // Without an App Configuration endpoint, fall back to local settings only.
        var appConfigEndpoint = configuration["AppConfigurationEndpoint"];
        if (string.IsNullOrEmpty(appConfigEndpoint))
            return configuration;

        configBuilder.AddAzureAppConfiguration(options =>
        {
            options.ConnectWithManagedIdentity(appConfigEndpoint)
                .Use(KeyFilter.Any)                             // keys with no label
                .Use(KeyFilter.Any, labelFilter: endpointName); // keys labeled for this endpoint
        });

        // Rebuild so the App Configuration values are layered on top.
        configuration = configBuilder.Build();
        return configuration;
    }

The first thing we do is load configuration from appsettings.json and environment variables as normal. We then check whether a configuration item named AppConfigurationEndpoint has been set, and if so, we call AddAzureAppConfiguration and point it at that endpoint.

When we run our endpoints in Azure, we’re using Managed Identities so that we’re not keeping credentials anywhere (Managed identities for Azure resources - Microsoft Entra | Microsoft Learn). The developer experience with this is great, as Visual Studio has built-in support for connecting with your own developer credentials.
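
The `ConnectWithManagedIdentity` and `Use` calls in the code above come from the preview package. In later releases of Microsoft.Extensions.Configuration.AzureAppConfiguration, the equivalent calls are, to the best of my knowledge, `Connect(Uri, TokenCredential)` and `Select`. The sketch below shows the same method under that assumption, using `DefaultAzureCredential` from the Azure.Identity package, which tries a managed identity in Azure and developer credentials (such as Visual Studio's) locally. The `ConfigurationFactory` class name is hypothetical.

```csharp
using System;
using System.IO;
using Azure.Identity;
using Microsoft.Extensions.Configuration;
using Microsoft.Extensions.Configuration.AzureAppConfiguration;

public static class ConfigurationFactory // hypothetical wrapper class
{
    public static IConfigurationRoot BuildConfiguration(string endpointName)
    {
        var configBuilder = new ConfigurationBuilder()
            .SetBasePath(Directory.GetCurrentDirectory())
            .AddJsonFile("appsettings.json", optional: true, reloadOnChange: true)
            .AddEnvironmentVariables();

        // Local settings decide whether App Configuration is used at all.
        var localConfiguration = configBuilder.Build();
        var appConfigEndpoint = localConfiguration["AppConfigurationEndpoint"];
        if (string.IsNullOrEmpty(appConfigEndpoint))
        {
            return localConfiguration;
        }

        configBuilder.AddAzureAppConfiguration(options =>
        {
            options.Connect(new Uri(appConfigEndpoint), new DefaultAzureCredential())
                .Select(KeyFilter.Any, LabelFilter.Null) // keys with no label
                .Select(KeyFilter.Any, endpointName);    // keys labeled for this endpoint
        });

        return configBuilder.Build();
    }
}
```

When AppConfigurationEndpoint is not set, the method never touches Azure, so local development without a configuration store keeps working.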

The Use() calls tell the service which keys we are interested in. The first one essentially says “give me any keys with no label”, and the second says “give me any keys with this label”. This means environment-wide settings, such as the ServiceControl queue and the transport connection, can simply be set once. Any endpoint-specific settings or overrides can be labeled with the endpoint name.
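
The layering described here can be modeled in a few lines of plain C#. This is only a model of the behavior, not the provider's implementation: the `Setting` record, the `LabelLayering` helper, and the sample store contents are all hypothetical, and it assumes that values selected later replace earlier ones for the same key.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical store contents; a null label means "no label".
var store = new List<Setting>
{
    new("EndpointSettings:TransportType", null, "AzureServiceBus"),
    new("EndpointSettings:ServiceControlQueue", null, "Particular.ServiceControl"),
    new("EndpointSettings:AuditSagaChanges", null, "False"),
    new("EndpointSettings:AuditSagaChanges", "Sales", "True"),
    new("EndpointSettings:HostNameOverride", "Billing", "billing"),
    new("EndpointSettings:HostNameOverride", "Sales", "sales"),
};

var sales = LabelLayering.Resolve(store, "Sales");
Console.WriteLine(sales["EndpointSettings:AuditSagaChanges"]);    // True (Sales override)
Console.WriteLine(sales["EndpointSettings:ServiceControlQueue"]); // Particular.ServiceControl (shared)

public record Setting(string Key, string? Label, string Value);

public static class LabelLayering
{
    public static Dictionary<string, string> Resolve(IEnumerable<Setting> store, string endpointName)
    {
        var resolved = new Dictionary<string, string>(StringComparer.OrdinalIgnoreCase);

        // First pass: environment-wide keys with no label.
        foreach (var setting in store.Where(s => s.Label is null))
            resolved[setting.Key] = setting.Value;

        // Second pass: keys labeled with the endpoint name overwrite the shared values.
        foreach (var setting in store.Where(s => s.Label == endpointName))
            resolved[setting.Key] = setting.Value;

        return resolved;
    }
}
```

Resolving the same store for Billing would keep the shared AuditSagaChanges value of False, since only Sales has an override for that key.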

Here’s what this looks like in the Azure Portal for our two fictitious endpoints named Billing and Sales:

As developers, all we have to do now is create an environment variable named AppConfigurationEndpoint and point it at the appropriate configuration store. For our containers, all those environment variables in the YAML file are reduced to this:

    containers:
    - name: endpoint-name-1
      properties:
        environmentVariables:
        - name: AppConfigurationEndpoint
          value: https://appconfigname.azconfig.io
        image: registry.azurecr.io/image.name:1.2.3

If we make a change to the config in the portal, we simply restart the endpoint!

I hope this is useful for others!