Hi,

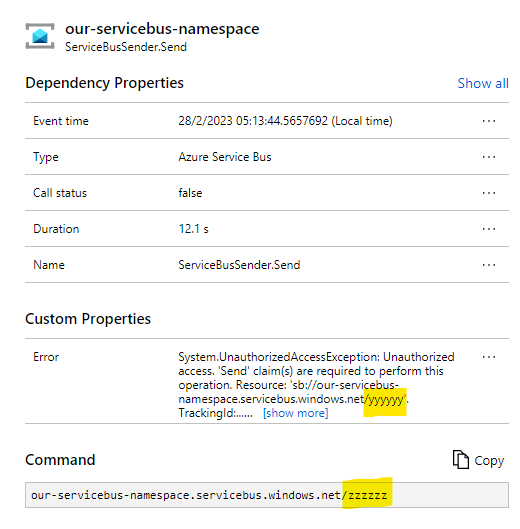

We are using NServiceBus.AzureFunctions.Worker.ServiceBus version 3.1.0 in combination with Azure.Messaging.ServiceBus version 7.11.1. The function runs on an Azure Functions Elastic Premium plan. While processing messages, we often see hundreds of occurrences of the same exception within a span of a few minutes. The messages eventually get processed.

We use the Primary Connection String (RootManageSharedAccessKey) of the Azure Service Bus Namespace. We store it in Azure Key Vault and have a Key Vault reference to it in the application settings of the Azure Function.
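To rule out a configuration mix-up, a check along the following lines could confirm that the value the function actually resolves is the namespace-level RootManageSharedAccessKey connection string, and not an entity-scoped one or an unresolved Key Vault reference. This is only a minimal Python sketch and not part of our function code: the helper names `parse_connection_string` and `describe` are hypothetical, and the sample value is a redacted placeholder in the usual `Key=Value;` connection string layout.

```python
def parse_connection_string(conn_str: str) -> dict[str, str]:
    """Split a 'Key=Value;Key=Value' connection string into a dict."""
    parts: dict[str, str] = {}
    for segment in conn_str.split(";"):
        if not segment.strip():
            continue  # skip empty segments, e.g. after a trailing ';'
        # Split on the first '=' only: base64 keys often end with '='.
        key, _, value = segment.partition("=")
        parts[key.strip()] = value.strip()
    return parts


def describe(conn_str: str) -> dict[str, object]:
    """Summarise the parts relevant to a missing 'Send' claim."""
    parts = parse_connection_string(conn_str)
    return {
        "endpoint": parts.get("Endpoint"),
        "key_name": parts.get("SharedAccessKeyName"),
        # An EntityPath means the string is scoped to one queue or topic.
        "entity_scoped": "EntityPath" in parts,
        # If the Key Vault reference was not resolved, the raw reference
        # text is what the application sees instead of a connection string.
        "unresolved_key_vault_reference": conn_str.startswith("@Microsoft.KeyVault("),
    }


# Placeholder value for illustration only, not a real secret.
sample = (
    "Endpoint=sb://xxxx.servicebus.windows.net/;"
    "SharedAccessKeyName=RootManageSharedAccessKey;"
    "SharedAccessKey=<redacted>"
)
info = describe(sample)
print(info)
```

In our case we would expect `key_name` to be RootManageSharedAccessKey and both flags to be false, which is why the intermittent 'Send' claim error is surprising.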

The full details of the exception we receive:

ExceptionType: Microsoft.Azure.WebJobs.Script.Workers.Rpc.RpcException

Method: Azure.Messaging.ServiceBus.ServiceBusProcessor+<OnProcessMessageAsync>d__104.MoveNext

OuterMessage: Exception while executing function: Functions.NServiceBusFunctionEndpointTrigger-xxxx

OuterAssembly: Microsoft.Azure.WebJobs.Extensions.ServiceBus, Version=5.7.0.0, Culture=neutral, PublicKeyToken=92742159e12e44c8

OuterMethod: Microsoft.Azure.WebJobs.ServiceBus.MessageProcessor.CompleteProcessingMessageAsync

InnermostType: Microsoft.Azure.WebJobs.Script.Workers.Rpc.RpcException

InnerMostMessage:

Result: Failure

Exception: System.AggregateException: One or more errors occurred. (Unauthorized access. 'Send' claim(s) are required to perform this operation. Resource: 'sb://xxxx.servicebus.windows.net/yyyy'. Timestamp:2023-02-07T09:15:25

For troubleshooting information, see https://aka.ms/azsdk/net/servicebus/exceptions/troubleshoot.)

---> System.UnauthorizedAccessException: Unauthorized access. 'Send' claim(s) are required to perform this operation. Resource: 'sb://xxxx.servicebus.windows.net/yyyy'. Timestamp:2023-02-07T09:15:25

For troubleshooting information, see https://aka.ms/azsdk/net/servicebus/exceptions/troubleshoot.

at Azure.Messaging.ServiceBus.Amqp.AmqpSender.SendBatchInternalAsync(AmqpMessage batchMessage, TimeSpan timeout, CancellationToken cancellationToken)

at Azure.Messaging.ServiceBus.Amqp.AmqpSender.<>c.<<SendAsync>b__24_0>d.MoveNext()

--- End of stack trace from previous location ---

at Azure.Messaging.ServiceBus.ServiceBusRetryPolicy.<>c__22`1.<<RunOperation>b__22_0>d.MoveNext()

--- End of stack trace from previous location ---

at Azure.Messaging.ServiceBus.ServiceBusRetryPolicy.RunOperation[T1,TResult](Func`4 operation, T1 t1, TransportConnectionScope scope, CancellationToken cancellationToken, Boolean logRetriesAsVerbose)

at Azure.Messaging.ServiceBus.ServiceBusRetryPolicy.RunOperation[T1,TResult](Func`4 operation, T1 t1, TransportConnectionScope scope, CancellationToken cancellationToken, Boolean logRetriesAsVerbose)

at Azure.Messaging.ServiceBus.ServiceBusRetryPolicy.RunOperation[T1](Func`4 operation, T1 t1, TransportConnectionScope scope, CancellationToken cancellationToken)

at Azure.Messaging.ServiceBus.Amqp.AmqpSender.SendAsync(IReadOnlyCollection`1 messages, CancellationToken cancellationToken)

at Azure.Messaging.ServiceBus.ServiceBusSender.SendMessagesAsync(IEnumerable`1 messages, CancellationToken cancellationToken)

at Azure.Messaging.ServiceBus.ServiceBusSender.SendMessageAsync(ServiceBusMessage message, CancellationToken cancellationToken)

at NServiceBus.TransportReceiveToPhysicalMessageConnector.Invoke(ITransportReceiveContext context, Func`2 next) in /_/src/NServiceBus.Core/Pipeline/Incoming/TransportReceiveToPhysicalMessageConnector.cs:line 61

at NServiceBus.RetryAcknowledgementBehavior.Invoke(ITransportReceiveContext context, Func`2 next) in /_/src/NServiceBus.Core/ServicePlatform/Retries/RetryAcknowledgementBehavior.cs:line 46

at NServiceBus.MainPipelineExecutor.Invoke(MessageContext messageContext) in /_/src/NServiceBus.Core/Pipeline/MainPipelineExecutor.cs:line 50

at NServiceBus.TransportReceiver.InvokePipeline(MessageContext c) in /_/src/NServiceBus.Core/Transports/TransportReceiver.cs:line 66

at NServiceBus.TransportReceiver.InvokePipeline(MessageContext c) in /_/src/NServiceBus.Core/Transports/TransportReceiver.cs:line 66

at NServiceBus.FunctionEndpoint.Process(Byte[] body, IDictionary`2 userProperties, String messageId, Int32 deliveryCount, String replyTo, String correlationId, ITransactionStrategy transactionStrategy, PipelineInvoker pipeline) in /_/src/NServiceBus.AzureFunctions.Worker.ServiceBus/FunctionEndpoint.cs:line 107

at NServiceBus.FunctionEndpoint.Process(Byte[] body, IDictionary`2 userProperties, String messageId, Int32 deliveryCount, String replyTo, String correlationId, ITransactionStrategy transactionStrategy, PipelineInvoker pipeline) in /_/src/NServiceBus.AzureFunctions.Worker.ServiceBus/FunctionEndpoint.cs:line 107

at NServiceBus.FunctionEndpoint.Process(Byte[] body, IDictionary`2 userProperties, String messageId, Int32 deliveryCount, String replyTo, String correlationId, FunctionContext functionContext) in /_/src/NServiceBus.AzureFunctions.Worker.ServiceBus/FunctionEndpoint.cs:line 42

at FunctionEndpointTrigger.Run(Byte[] messageBody, IDictionary`2 userProperties, String messageId, Int32 deliveryCount, String replyTo, String correlationId, FunctionContext context) in E:\Agent01\_work\16\s\src\backend\HR.XXXX.ServiceBus.FunctionHost\NServiceBus.AzureFunctions.Worker.SourceGenerator\NServiceBus.AzureFunctions.SourceGenerator.TriggerFunctionGenerator\NServiceBus__FunctionEndpointTrigger.cs:line 34

at Microsoft.Azure.Functions.Worker.Invocation.VoidTaskMethodInvoker`2.InvokeAsync(TReflected instance, Object[] arguments) in D:\a\1\s\src\DotNetWorker.Core\Invocation\VoidTaskMethodInvoker.cs:line 24

--- End of inner exception stack trace ---

at System.Threading.Tasks.Task.ThrowIfExceptional(Boolean includeTaskCanceledExceptions)

at System.Threading.Tasks.Task`1.GetResultCore(Boolean waitCompletionNotification)

at Microsoft.Azure.Functions.Worker.Invocation.DefaultFunctionInvoker`2.<>c.<InvokeAsync>b__6_0(Task`1 t) in D:\a\1\s\src\DotNetWorker.Core\Invocation\DefaultFunctionInvoker.cs:line 32

at System.Threading.Tasks.ContinuationResultTaskFromResultTask`2.InnerInvoke()

at System.Threading.Tasks.Task.<>c.<.cctor>b__272_0(Object obj)

at System.Threading.ExecutionContext.RunFromThreadPoolDispatchLoop(Thread threadPoolThread, ExecutionContext executionContext, ContextCallback callback, Object state)

--- End of stack trace from previous location ---

at System.Threading.ExecutionContext.RunFromThreadPoolDispatchLoop(Thread threadPoolThread, ExecutionContext executionContext, ContextCallback callback, Object state)

at System.Threading.Tasks.Task.ExecuteWithThreadLocal(Task& currentTaskSlot, Thread threadPoolThread)

--- End of stack trace from previous location ---

at Microsoft.Azure.Functions.Worker.Invocation.DefaultFunctionExecutor.ExecuteAsync(FunctionContext context) in D:\a\1\s\src\DotNetWorker.Core\Invocation\DefaultFunctionExecutor.cs:line 45

at Microsoft.Azure.Functions.Worker.OutputBindings.OutputBindingsMiddleware.Invoke(FunctionContext context, FunctionExecutionDelegate next) in D:\a\1\s\src\DotNetWorker.Core\OutputBindings\OutputBindingsMiddleware.cs:line 16

at Microsoft.Azure.Functions.Worker.GrpcWorker.InvocationRequestHandlerAsync(InvocationRequest request, IFunctionsApplication application, IInvocationFeaturesFactory invocationFeaturesFactory, ObjectSerializer serializer, IOutputBindingsInfoProvider outputBindingsInfoProvider) in D:\a\1\s\src\DotNetWorker.Grpc\GrpcWorker.cs:line 166

Stack: at System.Threading.Tasks.Task.ThrowIfExceptional(Boolean includeTaskCanceledExceptions)

at System.Threading.Tasks.Task`1.GetResultCore(Boolean waitCompletionNotification)

at Microsoft.Azure.Functions.Worker.Invocation.DefaultFunctionInvoker`2.<>c.<InvokeAsync>b__6_0(Task`1 t) in D:\a\1\s\src\DotNetWorker.Core\Invocation\DefaultFunctionInvoker.cs:line 32

at System.Threading.Tasks.ContinuationResultTaskFromResultTask`2.InnerInvoke()

at System.Threading.Tasks.Task.<>c.<.cctor>b__272_0(Object obj)

at System.Threading.ExecutionContext.RunFromThreadPoolDispatchLoop(Thread threadPoolThread, ExecutionContext executionContext, ContextCallback callback, Object state)

--- End of stack trace from previous location ---

at System.Threading.ExecutionContext.RunFromThreadPoolDispatchLoop(Thread threadPoolThread, ExecutionContext executionContext, ContextCallback callback, Object state)

at System.Threading.Tasks.Task.ExecuteWithThreadLocal(Task& currentTaskSlot, Thread threadPoolThread)

--- End of stack trace from previous location ---

at Microsoft.Azure.Functions.Worker.Invocation.DefaultFunctionExecutor.ExecuteAsync(FunctionContext context) in D:\a\1\s\src\DotNetWorker.Core\Invocation\DefaultFunctionExecutor.cs:line 45

at Microsoft.Azure.Functions.Worker.OutputBindings.OutputBindingsMiddleware.Invoke(FunctionContext context, FunctionExecutionDelegate next) in D:\a\1\s\src\DotNetWorker.Core\OutputBindings\OutputBindingsMiddleware.cs:line 16

at Microsoft.Azure.Functions.Worker.GrpcWorker.InvocationRequestHandlerAsync(InvocationRequest request, IFunctionsApplication application, IInvocationFeaturesFactory invocationFeaturesFactory, ObjectSerializer serializer, IOutputBindingsInfoProvider outputBindingsInfoProvider) in D:\a\1\s\src\DotNetWorker.Grpc\GrpcWorker.cs:line 166

Have you seen this error before? If not, could you provide some guidance on where to look to resolve this issue?

Thanks in advance,

Jeroen Janssens