I investigated further, and the problem seems to occur when there is a "high" number of updated contacts. Normally this number is below 100, but I see strange things happening with one customer that has 670 contacts.

The logic is:

1. Endpoint1: a scheduled Azure Function sends the message `CheckForUpdatedContacts` every 15 minutes.

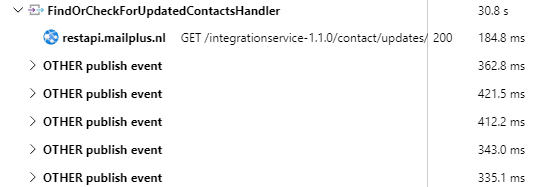

2. Endpoint1: `FindOrCheckForUpdatedContactsHandler` processes the message

   a. Calls the web service to find updated contacts

   b. Publishes `ContactUpdated` for each contact

3. Endpoint1: the saga `SyncContactWorkflow` is started by `ContactUpdated`

   a. Sends `FindCustomerOrLeadRequest` to Endpoint2

   b. Receives `FindCustomerOrLeadResponse` from Endpoint2

   c. Sends `UpdateCustomerOrLeadRequest` to Endpoint2

   d. Receives `UpdateCustomerOrLeadResponse` from Endpoint2

   e. Completes and publishes `SyncContactCompleted`

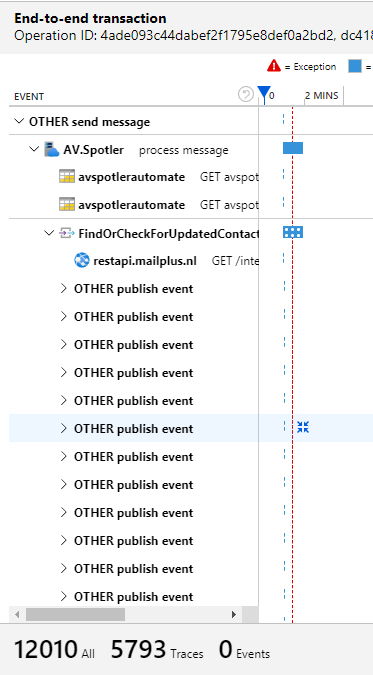

Big Dependency tree

I see a big dependency tree being built up in Application Insights because of the publish in step 2: each published event generates a lot of traces from step 3.

Each publish event in step 2 should start a new conversation, but I now have this:

```csharp
var options = new PublishOptions();
// options.StartNewConversation(); // doesn't work with publish
options.SetHeader(Headers.CorrelationId, Guid.NewGuid().ToString());
options.RequireImmediateDispatch();
```

I should have added `options.SetHeader(Headers.ConversationId, Guid.NewGuid().ToString());`, but looking at the tracing in Application Insights I don't see the CorrelationId changing for each published event either. They are all the same. How do I start a new conversation for published events?

ServiceBusException

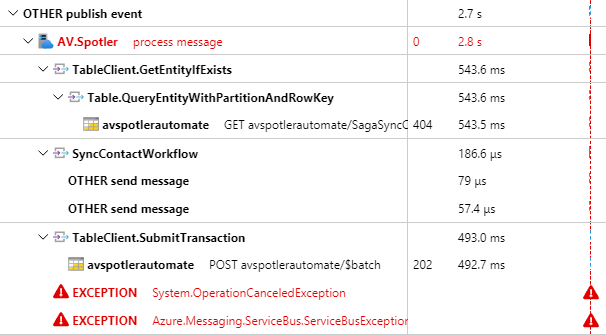

Then I see the time it takes to publish each event in step 2b build up from 300-400 ms to 4-7 s.

Then, after x published events, I see the following error coming from Azure Service Bus:

```
Azure.Messaging.ServiceBus.ServiceBusException: The operation was canceled. (ServiceCommunicationProblem). For troubleshooting information, see https://aka.ms/azsdk/net/servicebus/exceptions/troubleshoot.
 ---> System.OperationCanceledException: The operation was canceled.
   at Microsoft.Azure.Amqp.AsyncResult.End[TAsyncResult](IAsyncResult result)
   at Microsoft.Azure.Amqp.SendingAmqpLink.<>c.<SendMessageAsync>b__12_1(IAsyncResult r)
   at System.Threading.Tasks.TaskFactory`1.FromAsyncCoreLogic(IAsyncResult iar, Func`2 endFunction, Action`1 endAction, Task`1 promise, Boolean requiresSynchronization)
--- End of stack trace from previous location ---
   at Azure.Messaging.ServiceBus.Amqp.AmqpSender.SendBatchInternalAsync(AmqpMessage batchMessage, TimeSpan timeout, CancellationToken cancellationToken)
   --- End of inner exception stack trace ---
   at Azure.Messaging.ServiceBus.Amqp.AmqpSender.SendBatchInternalAsync(AmqpMessage batchMessage, TimeSpan timeout, CancellationToken cancellationToken)
   at Azure.Messaging.ServiceBus.Amqp.AmqpSender.SendBatchInternalAsync(IReadOnlyCollection`1 messages, TimeSpan timeout, CancellationToken cancellationToken)
   at Azure.Messaging.ServiceBus.Amqp.AmqpSender.<>c.<<SendBatchAsync>b__21_0>d.MoveNext()
--- End of stack trace from previous location ---
   at Azure.Messaging.ServiceBus.ServiceBusRetryPolicy.<>c__22`1.<<RunOperation>b__22_0>d.MoveNext()
--- End of stack trace from previous location ---
   at Azure.Messaging.ServiceBus.ServiceBusRetryPolicy.RunOperation[T1,TResult](Func`4 operation, T1 t1, TransportConnectionScope scope, CancellationToken cancellationToken, Boolean logRetriesAsVerbose)
   at Azure.Messaging.ServiceBus.ServiceBusRetryPolicy.RunOperation[T1,TResult](Func`4 operation, T1 t1, TransportConnectionScope scope, CancellationToken cancellationToken, Boolean logRetriesAsVerbose)
   at Azure.Messaging.ServiceBus.ServiceBusRetryPolicy.RunOperation[T1](Func`4 operation, T1 t1, TransportConnectionScope scope, CancellationToken cancellationToken)
   at Azure.Messaging.ServiceBus.Amqp.AmqpSender.SendBatchAsync(ServiceBusMessageBatch messageBatch, CancellationToken cancellationToken)
   at Azure.Messaging.ServiceBus.ServiceBusSender.SendMessagesAsync(ServiceBusMessageBatch messageBatch, CancellationToken cancellationToken)
   at NServiceBus.Transport.AzureServiceBus.MessageDispatcher.DispatchBatchForDestination(String destination, ServiceBusClient client, Transaction transaction, Queue`1 messagesToSend, CancellationToken cancellationToken) in /_/src/Transport/Sending/MessageDispatcher.cs:line 189
   at NServiceBus.Transport.AzureServiceBus.MessageDispatcher.Dispatch(TransportOperations outgoingMessages, TransportTransaction transaction, CancellationToken cancellationToken) in /_/src/Transport/Sending/MessageDispatcher.cs:line 92
   at NServiceBus.TransportReceiveToPhysicalMessageConnector.Invoke(ITransportReceiveContext context, Func`2 next) in /_/src/NServiceBus.Core/Pipeline/Incoming/TransportReceiveToPhysicalMessageConnector.cs:line 68
   at NServiceBus.RetryAcknowledgementBehavior.Invoke(ITransportReceiveContext context, Func`2 next) in /_/src/NServiceBus.Core/ServicePlatform/Retries/RetryAcknowledgementBehavior.cs:line 46
   at NServiceBus.TracingExtensions.<>c__DisplayClass0_0`1.<<Invoke>g__TracePipelineStatus|0>d.MoveNext() in /_/src/NServiceBus.Core/OpenTelemetry/Tracing/TracingExtensions.cs:line 28
```

Then, at the end of the telemetry, I see this exception:

```
Azure.Messaging.ServiceBus.ServiceBusException:
   at Azure.Messaging.ServiceBus.Amqp.AmqpReceiver+<RenewMessageLockInternalAsync>d__56.MoveNext (Azure.Messaging.ServiceBus, Version=7.8.0.0, Culture=neutral, PublicKeyToken=92742159e12e44c8)
   at System.Runtime.ExceptionServices.ExceptionDispatchInfo.Throw (System.Private.CoreLib, Version=6.0.0.0, Culture=neutral, PublicKeyToken=7cec85d7bea7798e)
   at System.Runtime.CompilerServices.TaskAwaiter.ThrowForNonSuccess (System.Private.CoreLib, Version=6.0.0.0, Culture=neutral, PublicKeyToken=7cec85d7bea7798e)
   at System.Runtime.CompilerServices.TaskAwaiter.HandleNonSuccessAndDebuggerNotification (System.Private.CoreLib, Version=6.0.0.0, Culture=neutral, PublicKeyToken=7cec85d7bea7798e)
   at Azure.Messaging.ServiceBus.Amqp.AmqpReceiver+<>c+<<RenewMessageLockAsync>b__55_0>d.MoveNext (Azure.Messaging.ServiceBus, Version=7.8.0.0, Culture=neutral, PublicKeyToken=92742159e12e44c8)
   at System.Runtime.ExceptionServices.ExceptionDispatchInfo.Throw (System.Private.CoreLib, Version=6.0.0.0, Culture=neutral, PublicKeyToken=7cec85d7bea7798e)
   at System.Runtime.CompilerServices.TaskAwaiter.ThrowForNonSuccess (System.Private.CoreLib, Version=6.0.0.0, Culture=neutral, PublicKeyToken=7cec85d7bea7798e)
   at System.Runtime.CompilerServices.TaskAwaiter.HandleNonSuccessAndDebuggerNotification (System.Private.CoreLib, Version=6.0.0.0, Culture=neutral, PublicKeyToken=7cec85d7bea7798e)
   at Azure.Messaging.ServiceBus.ServiceBusRetryPolicy+<RunOperation>d__21`2.MoveNext (Azure.Messaging.ServiceBus, Version=7.8.0.0, Culture=neutral, PublicKeyToken=92742159e12e44c8)
   at System.Runtime.ExceptionServices.ExceptionDispatchInfo.Throw (System.Private.CoreLib, Version=6.0.0.0, Culture=neutral, PublicKeyToken=7cec85d7bea7798e)
   at Azure.Messaging.ServiceBus.ServiceBusRetryPolicy+<RunOperation>d__21`2.MoveNext (Azure.Messaging.ServiceBus, Version=7.8.0.0, Culture=neutral, PublicKeyToken=92742159e12e44c8)
   at System.Runtime.ExceptionServices.ExceptionDispatchInfo.Throw (System.Private.CoreLib, Version=6.0.0.0, Culture=neutral, PublicKeyToken=7cec85d7bea7798e)
   at System.Runtime.CompilerServices.TaskAwaiter.ThrowForNonSuccess (System.Private.CoreLib, Version=6.0.0.0, Culture=neutral, PublicKeyToken=7cec85d7bea7798e)
   at System.Runtime.CompilerServices.TaskAwaiter.HandleNonSuccessAndDebuggerNotification (System.Private.CoreLib, Version=6.0.0.0, Culture=neutral, PublicKeyToken=7cec85d7bea7798e)
   at Azure.Messaging.ServiceBus.Amqp.AmqpReceiver+<RenewMessageLockAsync>d__55.MoveNext (Azure.Messaging.ServiceBus, Version=7.8.0.0, Culture=neutral, PublicKeyToken=92742159e12e44c8)
   at System.Runtime.ExceptionServices.ExceptionDispatchInfo.Throw (System.Private.CoreLib, Version=6.0.0.0, Culture=neutral, PublicKeyToken=7cec85d7bea7798e)
   at System.Runtime.CompilerServices.TaskAwaiter.ThrowForNonSuccess (System.Private.CoreLib, Version=6.0.0.0, Culture=neutral, PublicKeyToken=7cec85d7bea7798e)
   at System.Runtime.CompilerServices.TaskAwaiter.HandleNonSuccessAndDebuggerNotification (System.Private.CoreLib, Version=6.0.0.0, Culture=neutral, PublicKeyToken=7cec85d7bea7798e)
   at Azure.Messaging.ServiceBus.ServiceBusReceiver+<RenewMessageLockAsync>d__62.MoveNext (Azure.Messaging.ServiceBus, Version=7.8.0.0, Culture=neutral, PublicKeyToken=92742159e12e44c8)
   at System.Runtime.ExceptionServices.ExceptionDispatchInfo.Throw (System.Private.CoreLib, Version=6.0.0.0, Culture=neutral, PublicKeyToken=7cec85d7bea7798e)
   at System.Runtime.CompilerServices.TaskAwaiter.ThrowForNonSuccess (System.Private.CoreLib, Version=6.0.0.0, Culture=neutral, PublicKeyToken=7cec85d7bea7798e)
   at System.Runtime.CompilerServices.TaskAwaiter.HandleNonSuccessAndDebuggerNotification (System.Private.CoreLib, Version=6.0.0.0, Culture=neutral, PublicKeyToken=7cec85d7bea7798e)
   at Azure.Messaging.ServiceBus.ServiceBusReceiver+<RenewMessageLockAsync>d__61.MoveNext (Azure.Messaging.ServiceBus, Version=7.8.0.0, Culture=neutral, PublicKeyToken=92742159e12e44c8)
   at System.Runtime.ExceptionServices.ExceptionDispatchInfo.Throw (System.Private.CoreLib, Version=6.0.0.0, Culture=neutral, PublicKeyToken=7cec85d7bea7798e)
   at System.Runtime.CompilerServices.TaskAwaiter.ThrowForNonSuccess (System.Private.CoreLib, Version=6.0.0.0, Culture=neutral, PublicKeyToken=7cec85d7bea7798e)
   at System.Runtime.CompilerServices.TaskAwaiter.HandleNonSuccessAndDebuggerNotification (System.Private.CoreLib, Version=6.0.0.0, Culture=neutral, PublicKeyToken=7cec85d7bea7798e)
   at Azure.Messaging.ServiceBus.ReceiverManager+<RenewMessageLockAsync>d__18.MoveNext (Azure.Messaging.ServiceBus, Version=7.8.0.0, Culture=neutral, PublicKeyToken=92742159e12e44c8)
```

This customer, with 670 updated contacts every x hours, was onboarded around the same time we deployed NSB 8, so I can't be 100% sure that this wouldn't also have happened with NSB 7. But we did see similar numbers before NSB 8, and that wasn't an issue (at least not a noticeable one).

I have a feeling it is related to the telemetry, but that is just a guess. I haven't disabled it yet.

Can we improve this process, or are we doing something wrong that we can quickly fix?